Run gpt-oss-20b (ChatGPT) locally on your Mac. Learn how to install and chat offline without internet or cloud access.

Until now, you could run many AI models offline on your Mac without the internet, but nothing from the maker of ChatGPT that could stand in for it. That changes with the release of gpt-oss, OpenAI's open-weight model family: the weights are publicly available and can be downloaded for free from Hugging Face.

OpenAI has released two versions: the powerful gpt-oss-120b for high-end GPUs, and the lighter gpt-oss-20b, which works smoothly on a Mac with 16GB of RAM. The smaller model is perfect for Apple Silicon Macs (M1, M2, M3), making it accessible to most users.

We'll be running the lighter gpt-oss-20b model locally; the requirements for running it on your Mac are shown below. These models can also be installed on Windows and Linux. If you prefer OpenAI's official tool, the ChatGPT app for Mac offers a more native experience.

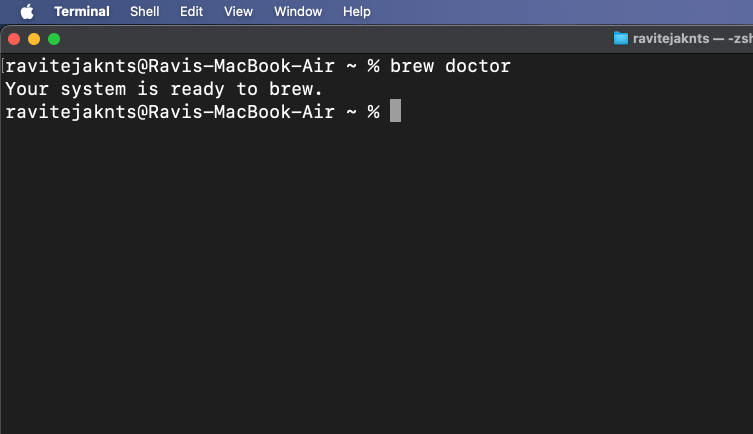

Homebrew is a package manager that lets you install software easily via Terminal.

/bin/bash -c "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/HEAD/install.sh)"

brew doctor

If it says "Your system is ready to brew", you're set.

If you’re having any issues or want a more detailed step-by-step process, check out our guide on how to install Homebrew on a Mac.

Ollama is the easiest way to run AI models locally.

brew install ollama

ollama serve

Keep this running in the background.

Alternatively, download the Ollama app from its website, install it as you would any other Mac app, and open it.

Now that Ollama is installed and running, it’s time to bring the gpt-oss-20b model onto your Mac. This step will download the model and prepare it for offline use.

ollama pull gpt-oss:20b

ollama run gpt-oss:20b

You'll now see a prompt where you can chat directly.

Type your message and press Return. Responses will appear right below. To exit, press Control + D. To restart later, use:

ollama run gpt-oss:20b
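The same local server that powers the Terminal chat can also be called from a script. The sketch below assumes Ollama is listening on its default address, http://localhost:11434, and uses its `/api/chat` endpoint with streaming turned off. The helper names `build_chat_request` and `chat` are illustrative and not part of Ollama.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"


def build_chat_request(prompt, model="gpt-oss:20b"):
    """Build the JSON body for a single-turn, non-streaming chat request."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # ask for one complete JSON reply instead of chunks
    }


def chat(prompt, model="gpt-oss:20b", url=OLLAMA_CHAT_URL):
    """Send the prompt to the local Ollama server and return the reply text."""
    body = json.dumps(build_chat_request(prompt, model)).encode("utf-8")
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as response:
        reply = json.load(response)
    return reply["message"]["content"]
```

With the server running and the model pulled, `print(chat("Explain quantum mechanics clearly."))` should print the model's answer. The first call can take a while because the model has to load into memory.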

With the recent update to Ollama, you now have a clean chat interface. Just open the Ollama app, click the Ollama icon in the menu bar, and choose the Open Ollama option.

This will open a chat interface. Simply select the new gpt-oss 20b model from the model selection drop-down and start chatting.
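If gpt-oss does not show up in the drop-down, one way to check what Ollama has installed is its local HTTP API. The sketch below assumes Ollama is listening on its default address, http://localhost:11434, and reads the `/api/tags` endpoint. The helper names `model_names` and `installed_models` are illustrative and not part of Ollama.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_TAGS_URL = "http://localhost:11434/api/tags"


def model_names(tags_response):
    """Extract the model names from a parsed /api/tags response."""
    return [entry["name"] for entry in tags_response.get("models", [])]


def installed_models(url=OLLAMA_TAGS_URL):
    """Ask the local Ollama server which models are installed."""
    with urllib.request.urlopen(url) as response:
        return model_names(json.load(response))
```

Calling `installed_models()` while the server is running returns a list of names; after a successful pull, `gpt-oss:20b` should be among them.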

Once you have the model running, it’s useful to keep track of what’s installed and remove any models you don’t need. Here’s how you can manage them:

ollama list

ollama rm gpt-oss:20b

If you installed Ollama through Homebrew (in which case the Ollama chat app is not available), or you prefer to use Open WebUI with Docker, follow the steps below:

docker --version

docker pull ghcr.io/open-webui/open-webui:main

docker run -d -p 9783:8080 -v open-webui:/app/backend/data --name open-webui ghcr.io/open-webui/open-webui:main

Then open this address in your browser:

http://localhost:9783/

Create an account, and start chatting in a clean, browser-based interface.
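If the page does not load, it can help to check whether anything is listening on the mapped port. The sketch below is a generic TCP reachability check, not a feature of Open WebUI or Docker. `is_port_open` is a hypothetical helper name, 9783 is the host port from the `docker run` command above, and 11434 is Ollama's default port.

```python
import socket


def is_port_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False
```

For example, `is_port_open("localhost", 9783)` checks the Open WebUI container and `is_port_open("localhost", 11434)` checks the Ollama server. A `False` result for the first usually means the container is not running or the port mapping differs.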

If you prefer not to use Ollama, there are other methods from Hugging Face:

The Transformers library is best for developers comfortable with Python who want full control over prompts, reasoning levels, and integration into apps. It is also a good fit for research or building custom AI workflows.

pip install -U transformers kernels torch

from transformers import pipeline

pipe = pipeline("text-generation", model="openai/gpt-oss-20b", torch_dtype="auto", device_map="auto")

outputs = pipe([{"role": "user", "content": "Explain quantum mechanics clearly."}], max_new_tokens=256)

print(outputs[0]["generated_text"][-1])

vLLM is ideal for running an OpenAI-compatible API locally. It is a good choice if you want to connect local models to apps that expect an OpenAI endpoint.

uv pip install --pre vllm==0.10.1+gptoss --extra-index-url https://wheels.vllm.ai/gpt-oss/

vllm serve openai/gpt-oss-20b

LM Studio is great for users who want a clean GUI without messing with Docker. It is an easy way to download and run models visually.

Use this command:

lms get openai/gpt-oss-20b

The Hugging Face CLI is best for advanced users who want direct access to raw model weights for custom deployment, fine-tuning, or experiments.

Download weights:

huggingface-cli download openai/gpt-oss-20b --include "original/*" --local-dir gpt-oss-20b/

That’s it. You now have ChatGPT-like AI running locally on your Mac, fully offline. No cloud, no accounts, no data leaving your machine. Whether it’s drafting emails, brainstorming, or experimenting with AI, gpt-oss and Ollama make it private, fast, and yours.
