Visual Intelligence makes your iPhone smarter. Point your camera to identify, translate, or ask ChatGPT on the spot.

Apple first introduced Visual Intelligence in iOS 18.2 as a feature that could recognize what your iPhone’s camera saw in real time. With iOS 26, Apple takes it a step further: Visual Intelligence can now analyze both what’s in front of your camera and anything displayed on your screen. That means you can identify objects, translate text, shop online, or even ask ChatGPT questions about what you see.

Here’s a full guide on how to use Visual Intelligence on your iPhone.

Visual Intelligence is an Apple Intelligence-powered feature that combines on-device AI with real-time visual understanding. It debuted with the iPhone 16 series and requires at least an A17 Pro chip with an advanced Neural Engine for fast, private processing.

With it, your iPhone can recognize and extract details from what your camera or screen shows, from identifying a plant to translating a menu, finding products online, or getting instant help from ChatGPT.

Visual Intelligence is part of Apple Intelligence, available on the following models:

iPhone 15 Pro, 15 Pro Max, iPhone 16, 16 Plus, 16e, 16 Pro, 16 Pro Max, iPhone 17, 17 Pro, 17 Pro Max, and iPhone Air.

From learning about nearby businesses to translating text or chatting with ChatGPT, Visual Intelligence can be your everyday AI assistant. Here’s how to use it.

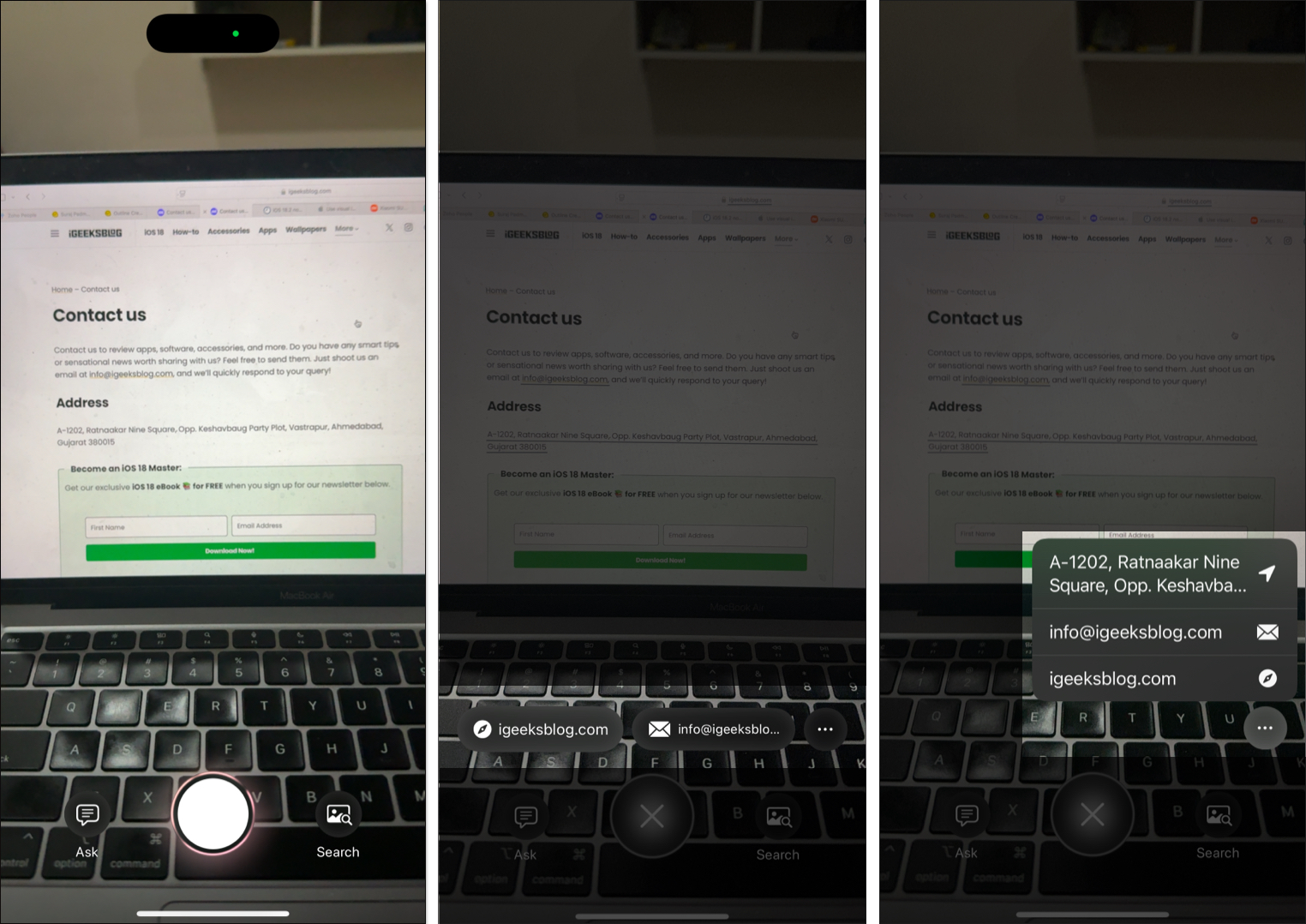

Visual Intelligence can show you business hours, menus, and contact details just by pointing your camera.

Besides these actions, you’ll also find options to call the business, view its website, and more.

Visual Intelligence takes Live Text to a new level. Aim your camera at any printed or on-screen text, and your iPhone can instantly summarize, translate, or act on it.

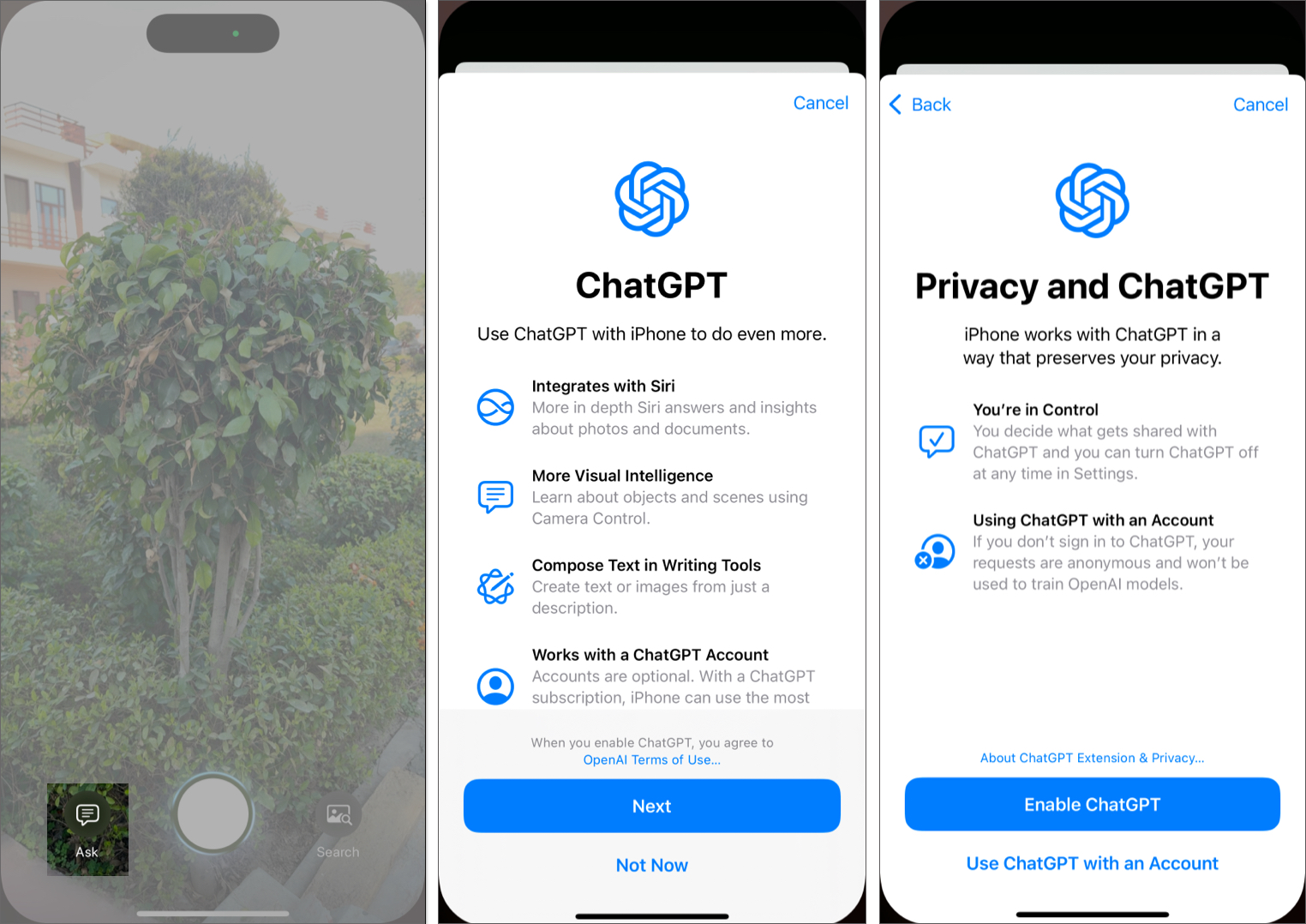

Apple’s ChatGPT integration, available since iOS 18.2, makes Visual Intelligence even smarter. You can ask detailed follow-up questions about anything your camera sees.

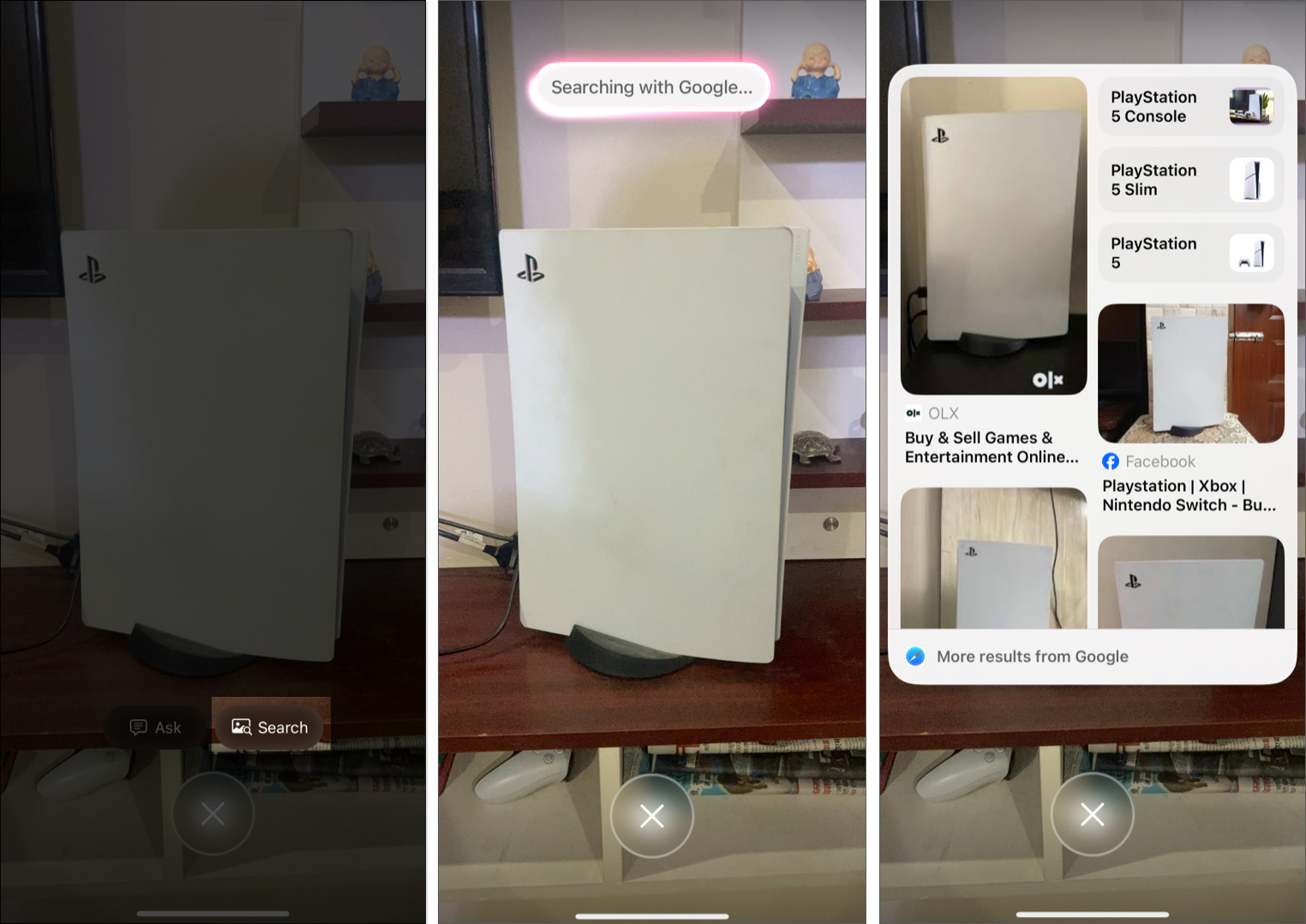

Want to find similar items online? Visual Intelligence can reverse-image search using Google.

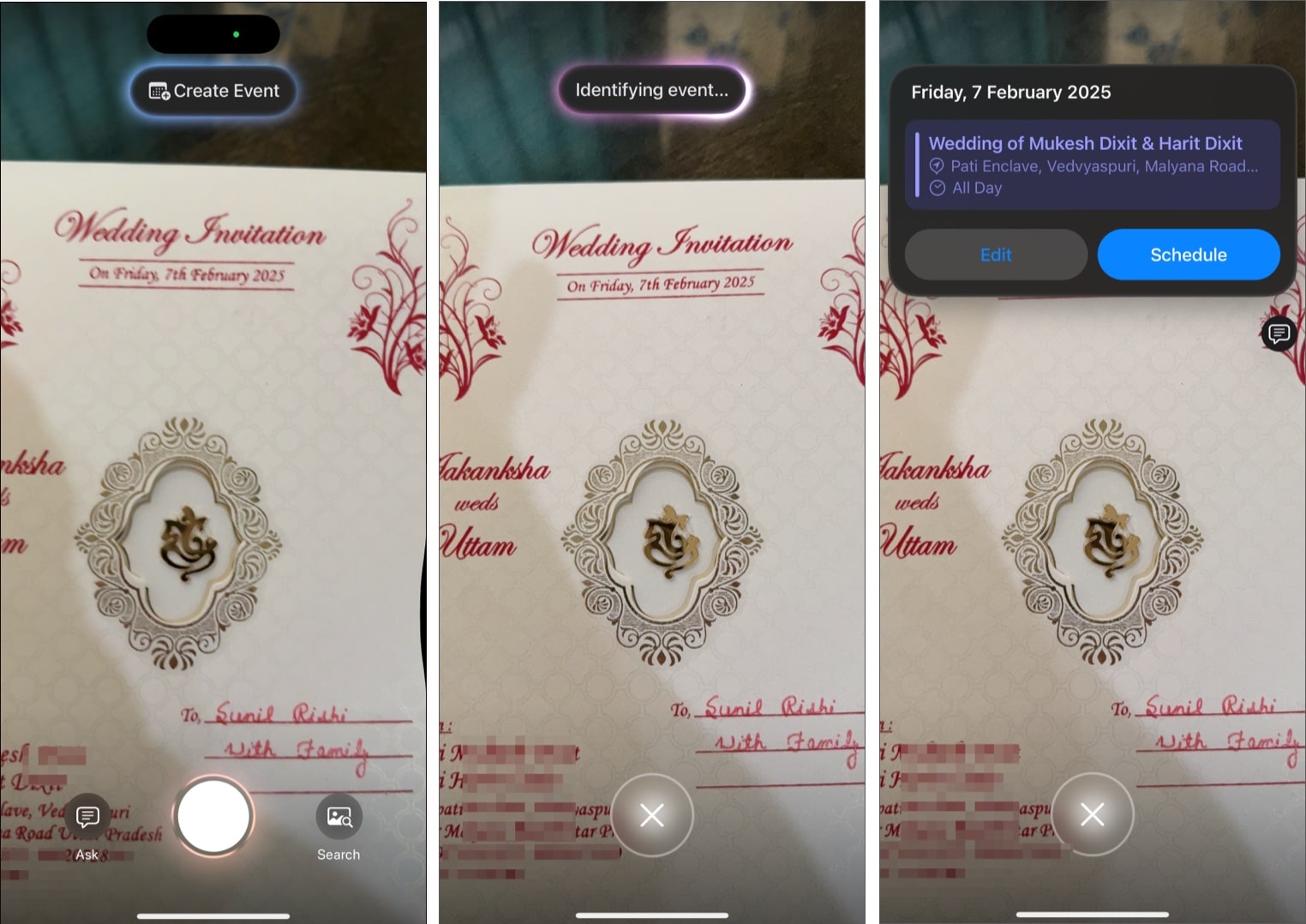

Starting with iOS 18.3, Visual Intelligence can detect event details from posters or flyers and help you add them to your Calendar.

With iOS 18.3 or later, identifying flora and fauna is effortless.

If your iPhone doesn’t have a Camera Control button, you can still access Visual Intelligence using the Action Button.

Once configured, long-press the Action Button to invoke Visual Intelligence and start using it just like on the iPhone 16 and 17 series.

iOS 26 introduces a major upgrade: Visual Intelligence now works with screenshots. You can circle any part of a screenshot to search for it or ask ChatGPT about it instantly.

Use this to compare prices, research images, or get more context about what’s on your screen.

Visual Intelligence redefines how your iPhone sees and understands the world. Whether you’re identifying a plant, translating signs, or using ChatGPT for instant answers, it’s a powerful new way to connect the physical and digital worlds.

Are you using Visual Intelligence on your iPhone 17? Share your favorite feature in the comments below. We’d love to hear how you’re using it.