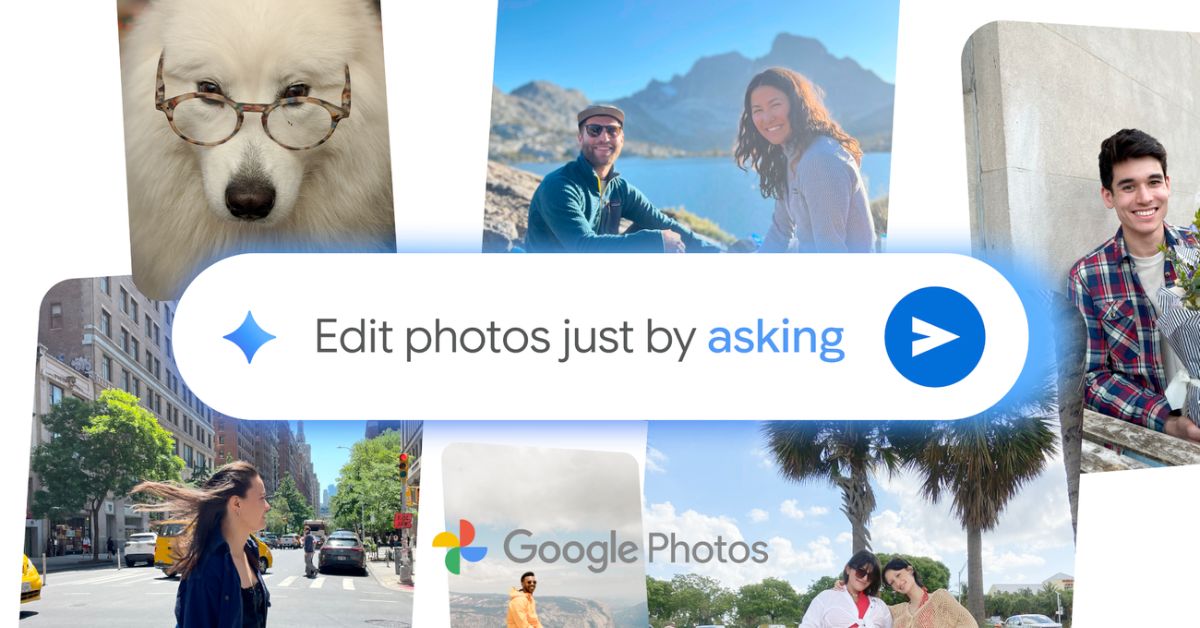

Google Photos AI Editing Lets You Transform Images With Just Your Voice

Google Photos now lets you edit images by simply asking. Powered by Gemini AI, the new conversational editor can fix, enhance, or transform photos with just voice or text commands.

- Google Photos conversational editing arrives: You can now ask for photo edits using plain language or voice, and Gemini AI handles the changes automatically.

- AI handles complex edits in one go: Gemini AI lets you describe multiple edits at once, from lighting tweaks to background swaps, without touching traditional tools.

- Creative AI-driven enhancements: Beyond fixes, you can request fun additions like sunglasses, seasonal effects, or new scenery, and Gemini interprets your instructions in context.

- Built-in transparency with C2PA credentials: Photos carry metadata showing how and when an image was captured or altered, helping users understand and trust the edits.

- Limited launch on Pixel 10 first: Conversational editing starts in the U.S. on Pixel 10, with a gradual rollout planned for more devices and Google Photos platforms.

Google is turning photo editing into a conversation. Alongside the Pixel 10 launch, the company unveiled a new Google Photos feature that lets users request edits in plain language instead of adjusting sliders or searching for tools. Type an instruction or speak it aloud, and the app’s AI will handle the edits automatically.

Editing by Request

The system runs on Google’s Gemini AI, which powers both basic fixes and more elaborate edits. Instead of selecting a brightness tool or manually removing objects, users can simply describe the change they want. Google gave examples like:

- “Restore this old photo”

- “Remove the cars in the background”

- “Fix the washed out colors and add clouds to the sky”

- The deliberately vague “Make it better”

The AI applies changes instantly and can refine the result if the first attempt isn’t right. It also accepts multiple instructions in one prompt, so users can remove glare, brighten a shot, and add objects in the same request.

This launch ties into a broader overhaul of the Photos editor, which now blends natural language with quick suggestions and gesture-based tools such as circling a distraction to erase it. Google frames the update as a way to make polished edits possible for anyone, regardless of editing knowledge.

Expanding Creative Options

The conversational approach isn’t limited to fixes. Users can request playful additions like party hats or sunglasses, change a photo’s background, or generate new elements entirely. Because Gemini interprets context, it handles multiple tools under the hood without requiring the user to manage settings or layers.

Of course, this raises the same questions that hover around AI editing broadly: how much of a photo remains authentic, and at what point does it shift into AI illustration? Google’s pitch is that it’s giving users more creative freedom while still showing where edits happened.

Transparency With C2PA Credentials

Alongside the new editing tools, Google is embedding industry-standard C2PA Content Credentials in the Pixel 10’s camera app. Google Photos will also display these credentials, showing metadata about how and when an image was captured or altered. The rollout begins with Pixel 10 devices and will gradually reach other Android and iOS devices.

These credentials join existing methods such as IPTC metadata and Google’s SynthID watermarking, creating a layered system to flag when AI is involved. How prominently those indicators will appear to casual users remains an open question.

Availability

Conversational editing debuts on the Pixel 10 in the U.S., with wider device support expected later. C2PA credential support in Google Photos will expand across Android and iOS over the coming weeks.

Google is effectively betting that making edits conversational and pairing them with visible transparency markers will reset how people think about photo editing. Instead of tweaking tools, the interaction shifts toward telling an AI what you want and trusting it to execute. Whether users embrace that shift, or push back against edits that feel less manual, may determine how quickly this style of editing spreads.



Written by

Ravi Teja KNTS

I’ve been writing about tech for over 5 years, with 1000+ articles published so far. From iPhones and MacBooks to Android phones and AI tools, I’ve always enjoyed turning complicated features into simple, jargon-free guides. Recently, I switched sides and joined the Apple camp. Whether you want to try out new features, catch up on the latest news, or tweak your Apple devices, I’m here to help you get the most out of your tech.
