iOS 17 introduces several new accessibility features that make it easier for people with disabilities to use their devices. These features include Assistive Access, Live Speech, Personal Voice, and Point and Speak. In this blog post, we briefly look at each of these features and how they can be used.

1. Assistive Access

Assistive Access is a cognitive accessibility feature that helps users with cognitive disabilities use iPhone and iPad more easily and independently. It streamlines app interfaces and highlights essential elements to reduce cognitive load. Apple worked closely with people with cognitive disabilities to design the feature.

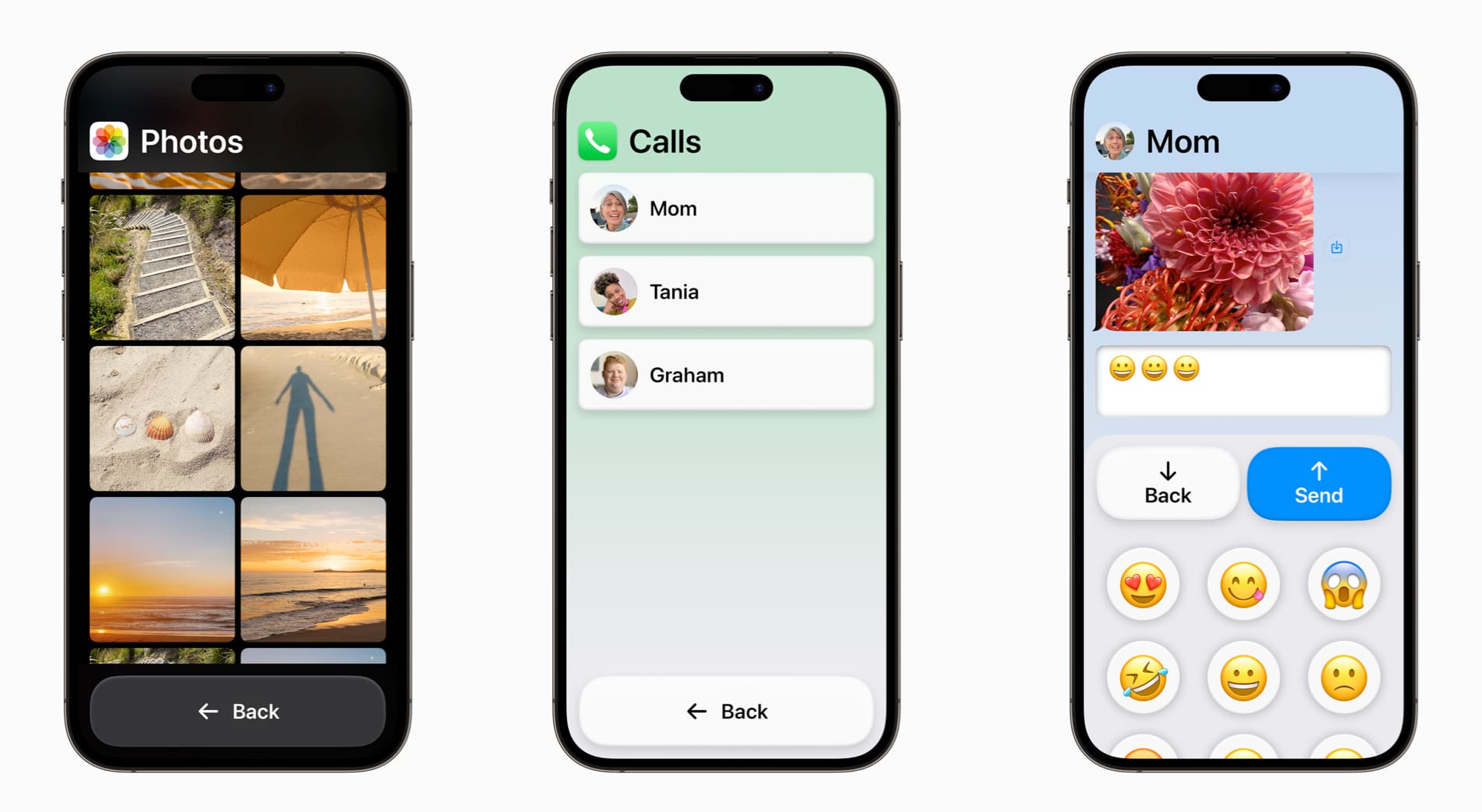

In Assistive Access, essential apps such as Camera, Photos, Music, Calls, and Messages have high-contrast buttons, large text labels, and options that can be customized to individual preferences. Whether you prefer a visual, grid-based layout or a text-based interface, Assistive Access offers a personalized experience that makes the device easier to use independently.

Apple has also combined Phone and FaceTime into a single Calls app for easier access. In Messages, users who prefer to communicate visually can use an emoji-only keyboard or record a video message to send to loved ones.

To learn more, check out our detailed guide on how to use Assistive Access in iOS 17 or later.

2. Live Speech and Personal Voice Advance Speech Accessibility

Apple has introduced Live Speech and Personal Voice to support speech accessibility for users who cannot speak or who are at risk of losing their ability to speak.

With Live Speech, you can type what you want to say during phone calls, FaceTime calls, and in-person conversations, and your iPhone speaks it aloud. You can also save commonly used phrases for quick access during conversations.

Personal Voice lets you create a synthesized voice that sounds like you. To set it up, you read along with text prompts to record 15 minutes of audio on your iPhone.

The resulting voice integrates with Live Speech, so the text you type can be spoken in your own voice. Apple says Personal Voice uses on-device machine learning to keep users' information private.

3. Point and Speak

Detection Mode in the Magnifier app gains a new vision accessibility feature called Point and Speak, designed for users who are blind or have low vision. When you point your finger at text on a physical object, such as the button labels on a household appliance, your iPhone recognizes the text and reads it aloud.

Point and Speak combines input from the camera, the LiDAR Scanner, and on-device machine learning, so it requires an iPhone or iPad with a LiDAR Scanner. It works with VoiceOver and can be used alongside other Magnifier features such as People Detection, Door Detection, and Image Descriptions to help users navigate their physical surroundings.

Follow our detailed guide to learn how to use Point and Speak in iOS 17 or later.

Cheers to empowerment!

These iOS 17 features reflect Apple's commitment to inclusivity and to empowering users with disabilities worldwide. Apple's collaboration with disability communities helps the features address real-life challenges, while on-device machine learning helps protect user privacy.

Are you going to try out these features? If so, share your thoughts in the comments below!
