Apple has long marketed itself as a champion of user privacy, in contrast to the likes of Facebook and Google. Lately, however, the Cupertino giant has come under fire from privacy advocates over its new CSAM prevention features.

Previously, Apple found itself caught up in the NSO debacle. It was discovered that planting spyware on iPhones was easier than on Android devices.

More recently, Apple’s new features to curb the circulation of Child Sexual Abuse Material (CSAM) have landed the company in controversy. Critics accuse Apple of devising a method that has the potential to undermine the privacy of millions.

While the effort to limit CSAM is laudable, its implementation is what’s raising eyebrows.

What are Apple’s new child safety protection features?

On Monday, Apple intensified its efforts to prevent the circulation of CSAM on its platforms. The company introduced three new features that will be available with iOS 15 and later.

- First, Apple is adding new resources to Siri and Spotlight search to help users find answers to CSAM-related queries. This feature is entirely harmless and doesn’t change anything in terms of privacy.

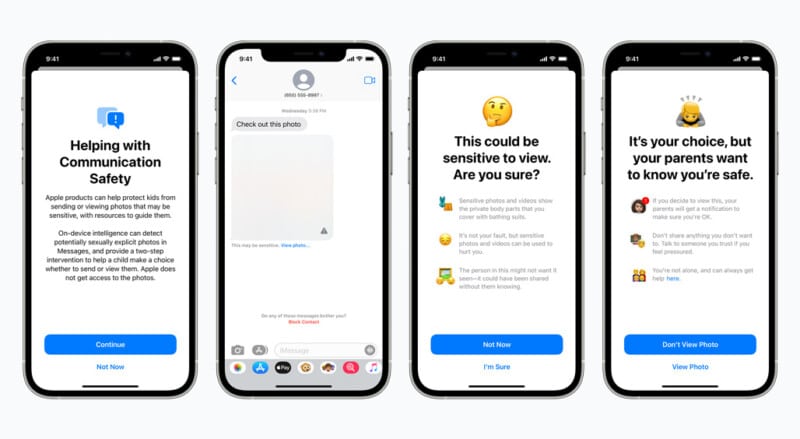

- Second, Apple is changing its Messages app by adding a parental control option that scans photos sent and received in messages for sexually explicit content for users under 18. If such content is found, the app will blur the photo in question and display a warning message alongside it.

- The last and most controversial feature is the scanning of the iCloud Photos library for potential CSAM.

How will the CSAM feature work?

If a user aged 12 or younger tries to view the flagged content in Messages, a notification will be sent to their parents. Notably, the scanning happens on-device, so Apple itself won’t know about the content.

Also, the notification that parents receive when kids access sensitive content is an optional feature.

Moreover, Apple will add a feature that scans your iCloud Photos library locally and matches the photos against a database of known CSAM provided by the National Center for Missing and Exploited Children (NCMEC). If a match is found, the device will generate a “safety voucher” and upload it to iCloud.

Once the number of these safety vouchers reaches an undisclosed threshold, Apple moderators can decrypt the flagged photos and review them for the presence of CSAM. If any is found, Apple could report the account to law enforcement agencies.
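To make the match-and-threshold flow described above more concrete, here is a minimal Python sketch. It is only an illustration under loud assumptions: `image_hash`, `Account`, and `REVIEW_THRESHOLD` are hypothetical names, the threshold value is invented (Apple has not disclosed the real one), and SHA-256 stands in for NeuralHash even though it only matches byte-identical files. Apple's actual design also relies on cryptographic protections that are not modeled here.

```python
import hashlib
from dataclasses import dataclass, field

# Hypothetical value: the real threshold is undisclosed.
REVIEW_THRESHOLD = 3


def image_hash(image_bytes: bytes) -> str:
    """Stand-in for NeuralHash. SHA-256 only matches byte-identical files."""
    return hashlib.sha256(image_bytes).hexdigest()


@dataclass
class Account:
    # Each entry represents one "safety voucher" uploaded alongside a matching photo.
    vouchers: list = field(default_factory=list)

    def upload(self, image_bytes: bytes, known_hashes: set) -> None:
        """Hash the photo on-device and record a voucher only if it matches."""
        digest = image_hash(image_bytes)
        if digest in known_hashes:
            self.vouchers.append(digest)

    def eligible_for_review(self) -> bool:
        """Human review becomes possible only once the threshold is reached."""
        return len(self.vouchers) >= REVIEW_THRESHOLD


# Usage: a made-up database of known hashes and a single account.
known_hashes = {image_hash(b"known-1"), image_hash(b"known-2"), image_hash(b"known-3")}
account = Account()
account.upload(b"holiday photo", known_hashes)  # no match, so no voucher
account.upload(b"known-1", known_hashes)        # match, so one voucher
```

In this sketch, a single match does nothing on its own; the account only becomes eligible for review after enough vouchers accumulate, which mirrors the threshold idea in the text.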

What does Apple say?

While the whole idea of scanning the iCloud Photos library sounds like a bold and invasive move, Apple has emphasized that the feature will not impact users’ privacy. It will only scan the photos uploaded to your iCloud, and it will do so via NeuralHash.

NeuralHash is a tool that assigns a unique identifier to each photo, thus obscuring the content of the photos, even from Apple. The company has stressed that users can turn off iCloud Photos syncing to stop any type of scanning.

Moreover, Apple’s official blog says,

“Messages uses on-device machine learning to analyze image attachments and determine if a photo is sexually explicit. The feature is designed so that Apple does not get access to messages.”

So, why the hullabaloo? The critics’ side of the story

Apple has repeatedly stressed that these features are built with privacy in mind to protect children from sexual predators, but the critics disagree. While the Messages feature performs its scanning on-device, Apple is inherently creating a snooping system that could have disastrous effects.

Kendra Albert of the Harvard Cyberlaw Clinic argues that:

These “child protection” features could prove costly to queer kids with non-accepting parents, who could get beaten and kicked out of their homes. For example, Albert says, a queer kid sending their transition photos to friends could be flagged by the new feature.

Imagine the implications this system could have in countries where homosexuality is still illegal. Authoritarian governments could ask Apple to add LGBTQ+ content to its database of known images. This arm-twisting of tech companies is nothing we have not seen before.

That said, Apple has a fairly good record of resisting such requests. For example, it firmly denied the FBI’s request to decrypt data from a mass shooter’s iPhone in 2016.

But in countries like China, where Apple stores iCloud data locally, the company could end up acceding to such demands, endangering users’ privacy altogether.

The Electronic Frontier Foundation says that:

Apple’s efforts are a “fully built system just waiting for external pressure to make the slightest change.”

The last feature is even more critical from a privacy point of view. Scanning iCloud photos is clearly a violation of user privacy. With this, your iPhone will have a feature that can scan your photos and match them against a set of illegal and sexually explicit content.

All this while you simply want to upload your photos to cloud storage!

Apple’s past vs. present: The rising concerns

When Apple defied the FBI and refused to unlock the iPhone of a mass killer, the company famously said, “Your device is yours. It doesn’t belong to us.”

It seems that the device belongs to Apple after all because you don’t have any control over who’s watching your device’s content.

Users can argue that the photos they take belong to them, and that Apple has no right to scan them. In my opinion, this point will be non-negotiable for many.

MSNBC has compared Apple’s iCloud Photos scanning feature to NSO’s Pegasus software, which gives you an idea of the surveillance system that’s being built in the name of the “greater good.” The report says:

“Think of the spyware abilities that the NSO Group, an Israeli company, provided to governments supposedly to track terrorists and criminals, which some countries then used to monitor activists and journalists. Now imagine those same abilities hard-coded into every iPhone and Mac computer on the planet.”

It is not very hard to imagine the detrimental effects of Apple’s implementation of the feature.

Apple wants you to trust it!

Ever since the company announced these features, privacy enthusiasts have opposed them fiercely. In response, Apple has released a PDF with FAQs about its CSAM prevention initiatives.

In the document, Apple mentions that it will refuse any demands from government agencies for adding non-CSAM images to its hash list. The PDF says:

“We have faced demands to build and deploy government-mandated changes that degrade the privacy of users before, and have steadfastly refused those demands. We will continue to refuse them in the future.”

In an interview with TechCrunch, Apple’s privacy head Erik Neuenschwander tried to address the concerns around the features.

He said, “The device is still encrypted, we still don’t hold the key, and the system is designed to function on on-device data. What we’ve designed has a device-side component — and it has the device-side component by the way, for privacy improvements. The alternative of just processing by going through and trying to evaluate users data on a server is actually more amenable to changes [without user knowledge], and less protective of user privacy.”

It seems Apple wants you to trust it with your phone’s private contents. But who knows when the company might go back on these promises?

The existence of a system that snoops on content you solely own before it is encrypted opens a can of worms. Once Apple has manufactured consent and put the system in place, it will be tough to restrict it.

Do the benefits outweigh the risks of Apple’s new child safety features?

While Apple’s efforts to combat child sexual abuse are laudable, they cannot be an excuse to eye your data. The very existence of a surveillance system creates the possibility of a security backdoor.

I believe that transparency is the key for Apple to achieve the middle ground. Some sort of legal involvement could also help the company gain trust in its plan to disrupt CSAM on the internet.

Nonetheless, introducing this system defeats the entire purpose of encryption. The company could start a wave of experiments that could potentially prove fatal for privacy in the future.

More importantly, it’s starting with Apple, the last company anyone expected this from. It’s true that you either die a hero or you live long enough to see yourself become the villain.
